TIDE COMING IN

Last week I enjoyed listening to Alex McLean talking about his live coding environment TidalCycles on a recent episode of Why We Bleep.

Alex is one of the driving forces of the live coding community that I have sometimes floated around the edges of, playing a few algoraves here and there. It's a great scene, friendly and inclusive and the tech they build is very cool. There was a period a while ago where I used TidalCycles quite a lot, and 65days even used it in a few places to generate some bits and pieces when we were amassing all of our noises for the No Man's Sky soundscapes.

I keep meaning to return to live coding. I have always found something very satisfying about the speed with which it allows me to create music, and the ease with which it's possible to make patterns that it would never have occurred to me to create manually.

But it has been quite a while since I have tried it. If you follow 65daysofstatic at all, then you might have noticed that we took quite a big excursion into the world of algorithmically-driven/generative music this last decade. I guess it started with making the No Man's Sky soundtrack (2015-2016), into Decomposition Theory (2017), then the various tools we used to make A Year of Wreckage and replicr, 2019 (2019-2020) before smashing everything we learned into Wreckage Systems (2020-now).

That's actually a pretty significant span of 7 to 9 years, depending on how you count it, and by the time Wreckage Systems was built, it felt like a fitting end to this era of Adventuring in Non-Linear Composition. More recently, the desire to just 'write some songs' has very much come back to the fore.

What was also happening over that period and especially over the last couple of years has been the rise of so-called 'AI', which has really taken the shine off building autonomous or generative systems even though I strongly believe that there is a clear distinction between the kinds of generative art/tools that are being or could be used for collective, more utopian ends and the kinds of Stable Diffusion, ChatGPT snake oil AI being used to make everything worse.

Hearing Alex talk about TidalCycles and the live coding community was a good reminder that not everything involving computers-generating-art needs to be bad, and this was enough for me to head over to strudel.cc (a browser-based version of TidalCycles) and try to remember how to live code.

I stumbled my way into a nice little piano phrase, which should be embedded below, although I'm not sure how that will work if you're reading this as an email. (It is HERE if you'd like to listen - press control-enter to start it).

That was fun, but I quickly realised that it wasn't specifically live-coding or TidalCycles that had brought me here, but rather a specific thing Alex had talked about, which was Konnakol, a kind of counting used in South Indian Carnatic music - something which I otherwise know nothing about but given my fondness for time signatures that aren't 4/4 I really ought to. (This video, again found via Alex sharing it a while ago, demonstrates it brilliantly - it's much more exciting than the thumbnail might imply, give it a go):

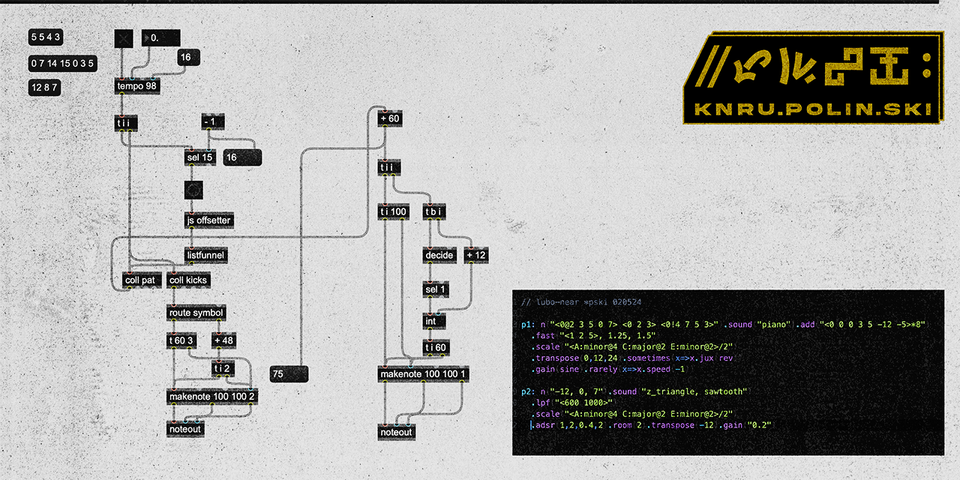

Anyway, somewhere in amongst his explaining, Alex was also talking about offsetting these kinds of rhythmical patterns with every repetition, and that is what I'd got in my head that I wanted to try. But I realised that I didn't actually have any idea how to do that in TidalCycles, so I made a Max patch instead. It is based around a root note pattern and a melodic pattern (which occasionally implements an alt-pattern), all of which keep offsetting/rotating based on yet another pattern. And it ended up sounding like this:

Which needs some finessing, but I kinda like it.
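
For anyone who thinks better in code than in patch cables, here is a rough Python sketch of the rotating-pattern idea. To be clear, this is not the Max patch itself, just a minimal illustration of the same shape: a root note pattern, a melodic pattern with an alternative version, and a third pattern that decides how far everything rotates on each repetition. Every name and number in it (`rotate`, `generate`, `alt_every`, the note values) is made up for the example, and the "occasional" alt-pattern is simplified to "every Nth cycle", which is an assumption rather than how the patch decides.

```python
def rotate(pattern, offset):
    """Return a copy of pattern rotated left by offset steps, wrapping around."""
    if not pattern:
        return []
    k = offset % len(pattern)
    return pattern[k:] + pattern[:k]


def generate(root_pattern, melody, alt_melody, offsets, alt_every, cycles):
    """Build a list of cycles; each cycle is a list of MIDI note numbers.

    root_pattern: root notes (MIDI numbers), one per cycle, looping.
    melody / alt_melody: intervals in semitones above the current root.
    offsets: how many extra steps to rotate after each cycle, looping.
    alt_every: use alt_melody on every alt_every-th cycle (0 = never).
    All of these parameters are hypothetical, chosen for illustration.
    """
    result = []
    shift = 0  # running rotation, accumulated from the offsets pattern
    for i in range(cycles):
        root = root_pattern[i % len(root_pattern)]
        use_alt = alt_every > 0 and (i + 1) % alt_every == 0
        source = alt_melody if use_alt else melody
        rotated = rotate(source, shift)
        result.append([root + interval for interval in rotated])
        shift += offsets[i % len(offsets)]
    return result


if __name__ == "__main__":
    phrase = generate(
        root_pattern=[60, 65],       # C and F, arbitrary example roots
        melody=[0, 3, 7, 10, 12],    # five steps, so it drifts against 4/4
        alt_melody=[0, 5, 7],
        offsets=[1, 2],
        alt_every=4,
        cycles=8,
    )
    for cycle in phrase:
        print(cycle)
```

Because the rotation accumulates rather than resetting, the melody never quite starts in the same place twice until the offsets have added up to a multiple of its length, which is roughly the effect I was after.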

There's a bunch of things this has made me consider, not least being that my own compositional instincts seem to already be very much pattern-based, and it's taken me a really long time to learn how to come at music 'sound design first'. Or to put it another way, my instinct, at least when it comes to writing, is to perceive music as a chain of discrete events, much like a typical pattern in TidalCycles or a melodic/rhythmic phrase, rather than as a continuous fluctuating signal. In contrast, the project I'm working on at the moment very much demands coming at composition 'sound first', and so this little sonic excursion back to making music with ordered, note-based events, no matter how complex, really highlighted the difference between the two modes. Or at least highlighted how my brain seems to perceive composition in these two distinct modalities. Could this be a new dialectic to embrace!? Fun fun.

This reminds me that I do actually have a ten minute, very much continuous signal/not-discrete noise track that has been sitting on a hard drive since forever. It doesn't really gel with the current Polinski output, but I wonder if it could maybe be a topic for a future K.N.R.U. dispatch... We shall see. But not now, as I have to climb aboard the struggle bus and try to make some music without relying on melodies.
